I mean, that makes sense: he gets killed and then Will Smith needs to solve the crime, right?

Well, if his experience with robots is his shitty little Roombas, then sure. (Seriously, don’t get Roombas, they’re outclassed by several other offerings.)

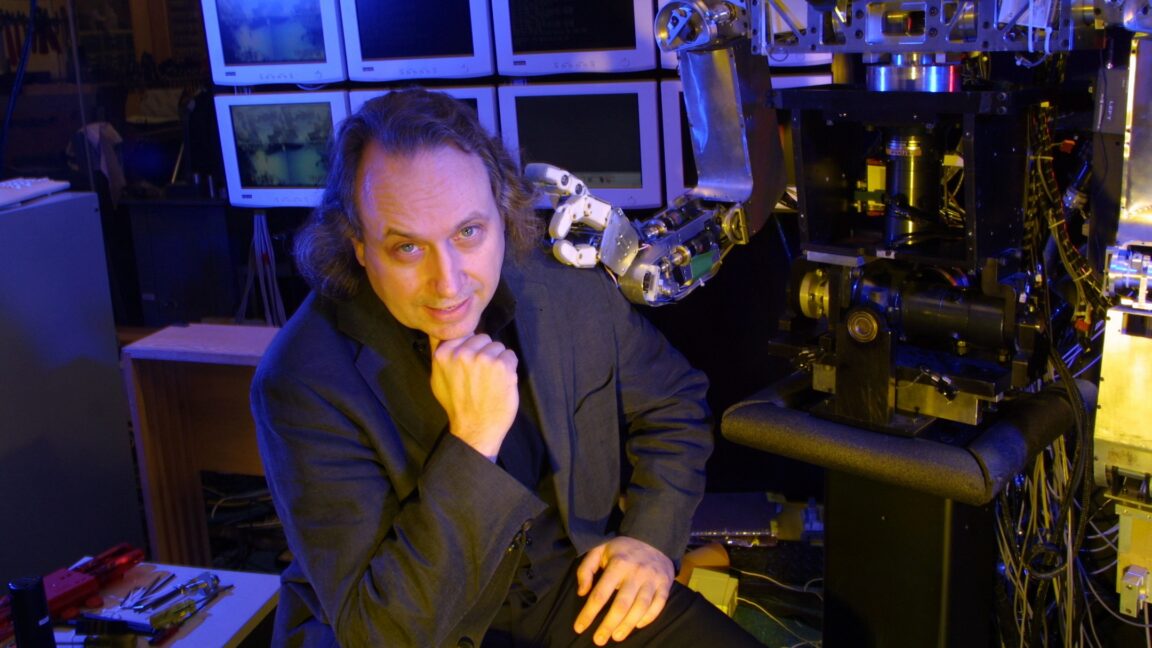

You’re going to doubt this man’s opinion on robots?

I haven’t read the article yet, but is it because robotics in general can be very dangerous, and proper industry safety practices require keeping your distance from any machine with the physical capability to cause harm?

In other words, it’s just best-practice, common-sense safety.

There was a Smarter Every Day video where this was briefly covered; I think it was the one in a shop that manufactures frisbees for Frisbee Golf. In the factory they have a ton of different robots doing different parts of the manufacturing process. Several of the robots are in cages (these are the ones that could physically harm anyone or anything inside the cage), while other, simpler machines are safe for humans to get close to.

If the robot is working correctly, it will be safe to be around. However, you can’t be sure it will work correctly, for a variety of reasons, and as such you shouldn’t get within range. If you want a robot people can get close to, you need to limit its capabilities such that even if it fails it cannot harm people. Simple as that.

Edit1: Lmfao, when I’d only read the title, for some reason I thought this was the guy behind the movie I, Robot, not some robotics business. Of course those robots wouldn’t be safe to be around…

Edit2: Yep, it’s basic safety around machines. If the machine has the physical strength to cause harm, you need to mitigate the risk of that happening by either keeping people away or limiting the capability to a safe level. Even if under normal operation it won’t cause harm, you need to consider failure scenarios.

You literally didn’t read the article. Anyone who reads your post has no idea what the article was about.

I was doing some commercial research on the regional robotics industry in the mid-2010s. It’s crazy how far things have come, with companies now using video data and simulations to program robots:

The crux of Brooks’ argument centers on how companies like Tesla and Figure are training their robots. Both have publicly stated they are using a vision-only approach, having workers wear camera rigs to record tasks like folding shirts or picking up objects. The data is then fed into AI models, which can imitate permutations of the motions in novel contexts. Tesla recently shifted away from motion capture suits and teleoperation for data collection to a video-based method, with workers wearing helmets and backpacks equipped with five cameras. Figure’s “Project Go-Big” initiative similarly relies on transferring knowledge directly from what they call “everyday human video.”

People who wear these cameras to feed into AI should make it a habit to regularly give the finger to the task between steps. Also take dance breaks when no one is watching.

I don’t know much about this stuff. But looking at it from a zoomed-out view, it seems to me like a repeat of tech history.

Just as we did when we grabbed the quickest option for profit at the time (the combustion engine), we’re ironically sending ourselves into an almost dark-age period for technological advancement, one we’ll exit much farther behind where we could’ve been.

Or maybe my lack of knowledge on this stuff means I’m way off, haha. Happy to be informed.

I think this is more likely a desperate attempt at gathering data to train AI than an actually effective solution to the problem of controlling robots. Tesla and Figure think the emerging AI market will be more profitable than physical robots.

But even then, no matter how good the training is, you’re going to need to keep robots away from people. If it can physically cause harm, then you have to assume it will. Even if the software is good, you can’t be sure the software is configured correctly, not 100% of the time. Machines near people have to be physically limited in their capabilities.

There is no explicit legal limit here; however, there are established industry practices across all of manufacturing, where robots are used extensively. So you could technically put robots in front of people, and maybe even get away with it, but if something happened you’d still likely be found liable for not taking appropriate safety measures.

A big issue is probably that we need to limit the ability of robots to less than that of humans. As well as being less desirable than making robots that perform better than humans, this also likely hits material limitations - metal parts are heavy, so servo motors have to move significant mass with significant force such that the mass of human body parts they collide with is negligible. To reduce the force to safe levels, everything has to be much lighter, which leads to fragility and other physical limits.
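To put rough numbers on the mass point, here is a minimal sketch using only textbook mechanics (kinetic energy and momentum conservation). The masses and speeds are made-up illustrative values, not measurements of any real robot or any safety threshold, and the function names are just ones I picked.

```python
def kinetic_energy_j(mass_kg, speed_m_s):
    """Kinetic energy in joules of a moving arm link: 0.5 * m * v^2."""
    return 0.5 * mass_kg * speed_m_s ** 2


def speed_fraction_after_impact(robot_mass_kg, body_mass_kg):
    """Fraction of its speed the arm keeps after a perfectly inelastic hit
    on a body part at rest (from momentum conservation)."""
    return robot_mass_kg / (robot_mass_kg + body_mass_kg)


HAND_KG = 0.5  # hypothetical mass of the body part being hit

# Hypothetical "heavy metal arm" versus "lightweight arm", both at 2 m/s.
for arm_kg in (20.0, 1.0):
    energy = kinetic_energy_j(arm_kg, 2.0)
    kept = speed_fraction_after_impact(arm_kg, HAND_KG)
    print(f"{arm_kg:>5.1f} kg arm: {energy:.1f} J, keeps {kept:.0%} of its speed")
```

The heavy arm carries far more energy and barely slows down when it hits something hand-sized, which is the sense in which the human body part is "negligible" to it. Lightening the arm helps on both counts, at the cost of strength.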

Damn. This is a good point.

I’m thinking cars with this…

Even if the software is good, you can’t be sure the software is configured correctly, not 100% of the time. Machines near people have to be physically limited in their capabilities.

Depending on where you live, cars are generally mechanically scrutinised, whether as often as annually or as little as on transfer of ownership or registration. Your local mechanic can do this and tick all the safety boxes.

Who’s doing that for the machine within?

Like, Bob’s Auto down the road can spot busted brake calipers in a second. I doubt they have software engineers as well.

There are three options I can think of there…

- Manufacturer does it as an income source

- Software has self-diagnostics and we just accept that they are also 100% good

- Train up all the Bob’s Autos, but on whose dime?

Car software is a massive issue in many regards. Traditionally, manufacturers have been terrible at it; many outsource to small outfits and the end product tends to be crap. Kind of like TVs, the software is bloated and the hardware (CPU and memory) is cheap. But all of it is proprietary, meaning you can’t reprogram it, and this is mandated by law for some parts, such as engine management.

There are diagnostics, though, and some of them are standardised and have been for decades. Other parts are proprietary, but it is possible to gain access to some of them. Technology Connections just started a series on car stuff, and it starts with how the Engine Management Unit (or whatever Nissan call their car computer) handles the fuel injection mixture, continuously oscillating between rich and lean to ensure proper catalytic conversion of the exhaust while also monitoring the condition of the catalyst.
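For anyone curious what that rich/lean oscillation looks like, here is a toy sketch of the general idea, assuming a sensor that only reports "rich" or "lean" and a controller that nudges the mixture the other way each step. It is not Nissan's (or anyone's) actual engine code, and the step size and starting value are invented.

```python
def simulate_closed_loop(steps=20, trim_step=0.02, start_lambda=1.05):
    """Toy closed-loop mixture control.

    lam is the air-fuel ratio relative to ideal: above 1.0 is lean,
    below 1.0 is rich. All numbers here are illustrative assumptions.
    """
    lam = start_lambda
    history = []
    for _ in range(steps):
        sensor_reads_lean = lam > 1.0
        # Lean reading: add fuel (lam goes down). Rich reading: cut fuel (lam goes up).
        lam += -trim_step if sensor_reads_lean else trim_step
        history.append(round(lam, 3))
    return history


print(simulate_closed_loop())
```

Because the controller only knows which side of ideal it is on, it never settles; it keeps crossing back and forth around 1.0, which is the oscillation described above.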

So yeah, you can maybe see why some of it should be locked down and difficult to interfere with, and why laws mandate this. If cars are supposed to meet emissions requirements, you have to ensure that somehow.

Unfortunately there aren’t as many laws requiring car manufacturers to open up other things in their software.

Bob’s Autos would probably have some sort of OBD tool for whatever vehicles he expected to work on. Car mechanics either keep up with the industry (and pay for access to some things) or they go out of business. And the cost is ultimately passed on to the customer.

When you get too close to the humanoid robot and it malfunctions:
