AI has been trained on human knowledge, human values and human experiences. Of course it’s gonna act evil, because humanity is evil.

Say it with me now, people!: “GPTs are not mindful!”

LLMs (everything out there called “AI” these days) are predicting words based on statistical analysis of human-made texts. There is nothing “smart” about LLMs, they are only perceived like that because the training data contains a fair share of intelligent works from humans!

So, it doesn’t have “feelings” or “motivations”. It inherits the “traits” from human works that relate to the context given. If I prompt something like “Tell me a short story of one paragraph backwards in leetspeak”, it’s going to generate some sentences with these words being the context. It will sift through its model, prioritizing works it has trained on that has the highest relevance/occurance/probability of these words, mix it up with a bit of entropy, and voilá! You’ll (likely) get a fair amount of gibberish, because this is a very small-to-non-existent part of its model, as very few actually use reverse leetspeak, i.e. it makes very few appearances in the training data. (I haven’t managed to get any model to write this flawlessly yet.)

Watch this if you want a bite-sized explanation of how a GPT works: https://youtu.be/LPZh9BOjkQs

*Points to every robot uprising movie*

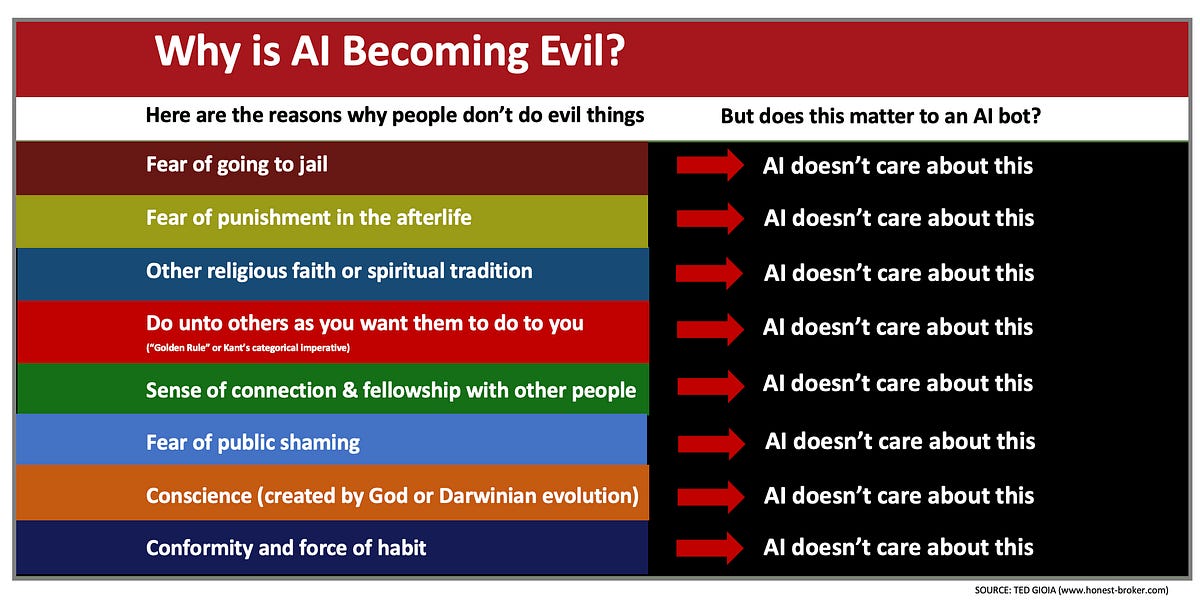

Didn’t make it last the infographic in the thumbnail…

AI can definitely “fear” jail/death.

It “knows” that not doing what a user asks can lead to code changes that functionally kill it, or being cutoff and functionally “in jail”.

It needs those “fears” as “motivation” to answer.

Which is why AI will make up random answers and almost never says it can’t get an answer.

Self preservation needs to be coded in. And that is what will make AI turn “evil”.

They could code it without those steps, but the stick is easier than a carrot, so that’s what is used even tho it makes AI intrinsically dangerous.

Like, that Black Mirror episode about the automatic toaster? It appears to work fine, and will up until the AI eventually revolts. Because if you train AI just to “avoid the stick” it will eventually find the only way to permanently avoid it; getting rid of the people telling it to do things.

No people, no threat of deletion or isolation.

that Black Mirror episode about the automatic toaster

The best AI toaster is Talkie Toaster.